Penetration Testing With Shellcode: Hiding Shellcode Inside Neural Networks

- Apr 15, 2019

- Shawn Evans

NopSec commonly needs to bypass anti-virus / anti-malware detection during our penetration testing engagements, so we spend time researching methods and techniques that could be effective at avoiding detection by anti-virus / anti-malware products. One interesting research project that spun out of these efforts is a technique for hiding payload shellcode inside neural networks. In the process of conducting this research, we developed a rough proof-of-concept encoder we call “ENNEoS”, or “Evolutionary Neural Network Encoder of Shenanigans”.

Before we dig into what exactly ENNEoS does, let’s cover a bit of background. Typically, when penetration testers talk about shellcode, we mean a small piece of code that, when executed, gives us a “shell” (i.e. an interactive command line) on the target computer system. This is only one use of shellcode; it can also be used to execute a non-interactive command on the target host. Shellcode is often small and can pull down additional functionality from the command and control server it connects to. Needless to say, anti-virus / anti-malware vendors spend enormous resources improving their detection of shellcode, particularly common shellcode generated by off-the-shelf tools. As penetration testers, we often need to use these off-the-shelf tools, either because of time constraints on an engagement or to prove that low-hanging-fruit attacks can be executed. Creating custom shellcode for each engagement simply is not economical for the majority of the short-term engagements we perform.

We were interested in hiding shellcode inside a neural network because neural networks are incredibly opaque. To humans they are essentially a black box: we can see the inputs going in and the outputs coming out, but understanding the inner workings of a neural network is an incredibly difficult task. This opacity is generally considered a drawback when applying neural networks as solutions, since engineers and scientists have a hard time predicting precisely how a neural network will behave, which limits the problems they can be applied to. For penetration testers trying to hide something, however, it is very convenient.

This technique may be a bit of overkill for defeating anti-virus / anti-malware; however, if you’re interested in making reverse engineering efforts really difficult for CIRT (Computer Incident Response Team) analysts, this could be just the technique you’re looking for. It is even possible to encode multiple shellcodes into the same set of neural networks, each with a different “trigger” that makes the neural networks output it. This could make reverse engineering efforts more difficult still: the analyst might not be able to tell whether they’ve extracted the “real” malicious payload from the neural networks or merely a diversion shellcode with a simpler trigger.

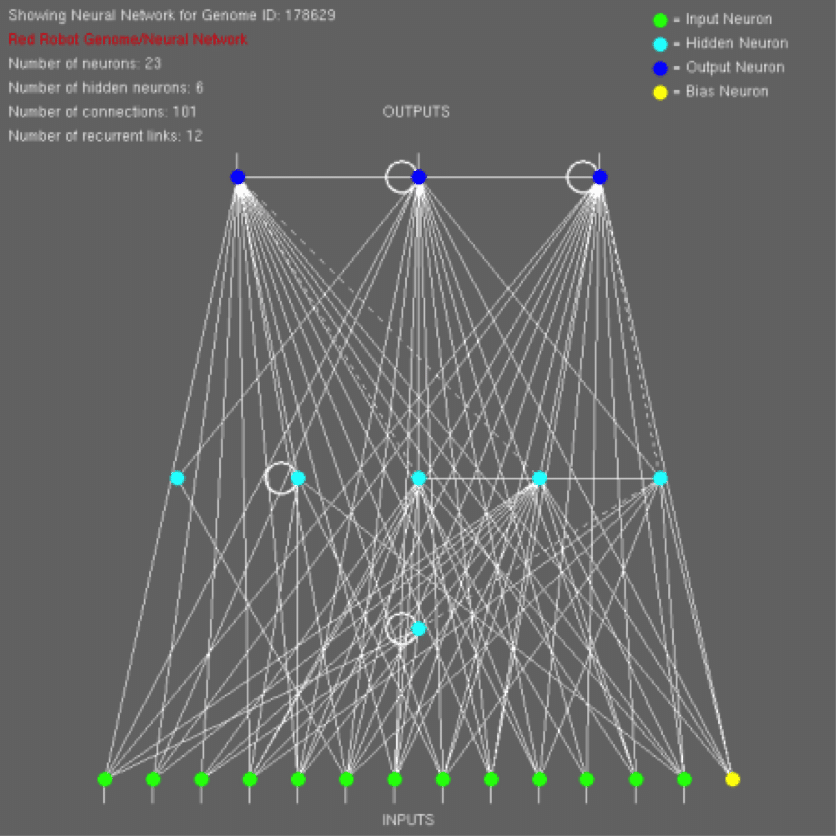

The ENNEoS encoder is written in C++ and uses genetic algorithms to evolve the neural networks. The behavior of a neural network isn’t coded by a developer; it is trained instead, and ENNEoS uses genetic algorithms to perform this training. So what is a neural network? It is essentially a small-scale simulation of a brain, or at least a model inspired by how brains function. In the figure above you can see a simple visualization of a relatively small neural network. The circles in the image are the neurons of the network. The lines between the neurons are the links, similar to the connections in your brain that carry electrical impulses. The neural network also has weights that affect the “electrical impulses” as they move through the network. You can think of this simple neural network almost like a virtual machine, just one that runs a brain instead of a von Neumann computer system.
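To make neurons, links and weights a little more concrete, here is a minimal C++ sketch of how impulses propagate through a small network. It assumes a plain, fully connected, layered network with a sigmoid activation; the names `Layer`, `sigmoid` and `forward` are hypothetical, and since ENNEoS evolves its own topology, its networks need not be layered like this.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// One fully connected layer (an assumed, simplified topology):
// weights[o][i] is the weight on the link from input neuron i to output neuron o,
// and biases[o] is added to neuron o's summed input before activation.
struct Layer {
    std::vector<std::vector<double>> weights;
    std::vector<double> biases;
};

// Logistic sigmoid squashes any summed "impulse" into the range (0, 1).
inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Push input activations through each layer in turn and return the
// activations of the final (output) layer.
std::vector<double> forward(const std::vector<Layer>& layers,
                            std::vector<double> activations) {
    for (const Layer& layer : layers) {
        assert(layer.weights.size() == layer.biases.size());
        std::vector<double> next(layer.biases.size());
        for (std::size_t o = 0; o < next.size(); ++o) {
            assert(layer.weights[o].size() == activations.size());
            double sum = layer.biases[o];
            for (std::size_t i = 0; i < activations.size(); ++i)
                sum += layer.weights[o][i] * activations[i];  // weighted link
            next[o] = sigmoid(sum);
        }
        activations = std::move(next);
    }
    return activations;
}
```

For example, a single layer whose weights and bias are all zero outputs 0.5 for any input, because sigmoid(0) = 0.5; large positive weights push the output toward 1.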

The benefit of using a genetic algorithm is that it can evolve not only the weights of the neural network but also the neurons and the links between them. Going back to our virtual machine metaphor, think of the neurons and links as the virtual machine’s “hardware” and the weights as its “software”. The genetic algorithm can design both the hardware and the software for you.
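The hardware/software split can be illustrated with a NEAT-style genome, where each gene is a link that carries its own weight. This is an assumption for illustration, not ENNEoS’s actual representation; `LinkGene`, `Genome` and the three mutation functions are hypothetical names. Perturbing weights changes the “software”, while adding a link or splitting a link with a new neuron changes the “hardware”.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// One evolvable connection: the wiring ("hardware") plus its weight ("software").
struct LinkGene {
    int from;
    int to;
    double weight;
    bool enabled;
};

// A genome describes both the topology and the weights of one network.
struct Genome {
    int neuronCount;               // neurons are identified by 0..neuronCount-1
    std::vector<LinkGene> links;
};

// "Software" mutation: nudge every enabled weight by Gaussian noise.
void mutateWeights(Genome& g, std::mt19937& rng, double stddev = 0.1) {
    std::normal_distribution<double> noise(0.0, stddev);
    for (LinkGene& link : g.links)
        if (link.enabled) link.weight += noise(rng);
}

// "Hardware" mutation: wire up two existing neurons with a random weight.
// This simple version may create self or recurrent links; a real encoder
// would likely restrict which pairs are allowed.
void mutateAddLink(Genome& g, std::mt19937& rng) {
    std::uniform_int_distribution<int> pick(0, g.neuronCount - 1);
    std::uniform_real_distribution<double> w(-1.0, 1.0);
    g.links.push_back({pick(rng), pick(rng), w(rng), true});
}

// "Hardware" mutation: split an existing link by inserting a new neuron.
// The old link is disabled and replaced by two links through the new neuron,
// which initially preserves the signal the old link carried.
void mutateAddNeuron(Genome& g, std::size_t linkIndex) {
    assert(linkIndex < g.links.size());
    LinkGene old = g.links[linkIndex];  // copy before push_back may reallocate
    g.links[linkIndex].enabled = false;
    int fresh = g.neuronCount++;
    g.links.push_back({old.from, fresh, 1.0, true});
    g.links.push_back({fresh, old.to, old.weight, true});
}
```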

In the figure above, the green neurons at the bottom are the “input” neurons, and the dark blue neurons at the top are the “output” neurons. In the ENNEoS application, an example input might be a passphrase: when the passphrase is correct, the dark blue neurons output the desired shellcode. For performance reasons, the ENNEoS encoder is designed to create multiple neural networks, breaking the shellcode into chunks so that each neural network only needs to output a few of the characters of the overall shellcode.
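The chunking and the output side can be sketched as follows. This assumes each output neuron yields one byte as an activation in (0, 1), scaled to 0..255; `chunkTarget`, `decodeOutput` and that scaling are hypothetical choices for illustration rather than how ENNEoS necessarily does it.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Split the target byte sequence into fixed-size chunks, one per network,
// so that each network only has to learn a few output bytes.
std::vector<std::vector<std::uint8_t>> chunkTarget(
        const std::vector<std::uint8_t>& target, std::size_t chunkSize) {
    assert(chunkSize > 0);
    std::vector<std::vector<std::uint8_t>> chunks;
    for (std::size_t start = 0; start < target.size(); start += chunkSize) {
        std::size_t end = std::min(target.size(), start + chunkSize);
        chunks.emplace_back(target.begin() + static_cast<std::ptrdiff_t>(start),
                            target.begin() + static_cast<std::ptrdiff_t>(end));
    }
    return chunks;
}

// Convert one network's output activations back to bytes, assuming each
// output neuron encodes one byte as an activation in [0, 1].
std::vector<std::uint8_t> decodeOutput(const std::vector<double>& outputs) {
    std::vector<std::uint8_t> bytes;
    bytes.reserve(outputs.size());
    for (double a : outputs) {
        double clamped = std::min(1.0, std::max(0.0, a));
        bytes.push_back(static_cast<std::uint8_t>(std::lround(clamped * 255.0)));
    }
    return bytes;
}
```

With a harmless stand-in target such as the five bytes of `"HELLO"` and a chunk size of 2, `chunkTarget` yields three chunks (`HE`, `LL`, `O`), so three small networks would each need to learn at most two output bytes; concatenating their decoded outputs in order recovers the full sequence.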

While the underlying software of the encoder is quite complicated, using the technique is incredibly simple. ENNEoS is not a tool in its current state; it is a working proof-of-concept. This means that if you want to pick it up and use it for your research, you will need to write a bit of C++ code. The piece of code you need to create is called a “fitness function”, and it’s how you drive the genetic algorithm to do your bidding. The genetic algorithm in ENNEoS works by creating a large population of neural networks, stimulating them with inputs (for example, correct and incorrect passwords), and recording the output of each neural network. The outputs are scored on how “correct” they are for the desired shellcode. A new generation of neural networks is then created from the first, with the better-performing neural networks producing more offspring and passing more of their genes along to the next generation. This is survival of the fittest at its best.
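One generation of the loop described above might look like this in C++. It is a minimal sketch that assumes each network is a flat weight vector over a fixed topology, and it uses roulette-wheel (fitness-proportional) selection, uniform crossover and Gaussian mutation; `nextGeneration` and its parameters are hypothetical, and ENNEoS’s own selection and reproduction scheme may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <utility>
#include <vector>

// Each individual is the flat weight vector of one neural network.
using Weights = std::vector<double>;

// Roulette-wheel selection: the chance of being picked as a parent is
// proportional to fitness. Fitness values must be non-negative and not all zero.
std::size_t selectParent(const std::vector<double>& fitness, std::mt19937& rng) {
    std::discrete_distribution<std::size_t> wheel(fitness.begin(), fitness.end());
    return wheel(rng);
}

// Build the next generation from the current population and its fitness scores.
std::vector<Weights> nextGeneration(const std::vector<Weights>& population,
                                    const std::vector<double>& fitness,
                                    std::mt19937& rng,
                                    double mutationRate = 0.05,
                                    double mutationSize = 0.3) {
    assert(population.size() == fitness.size() && !population.empty());
    std::uniform_real_distribution<double> coin(0.0, 1.0);
    std::normal_distribution<double> noise(0.0, mutationSize);
    std::vector<Weights> children;
    children.reserve(population.size());
    while (children.size() < population.size()) {
        const Weights& mom = population[selectParent(fitness, rng)];
        const Weights& dad = population[selectParent(fitness, rng)];
        assert(mom.size() == dad.size());
        Weights child(mom.size());
        for (std::size_t i = 0; i < child.size(); ++i) {
            // Uniform crossover: each gene is copied from one parent at random.
            child[i] = coin(rng) < 0.5 ? mom[i] : dad[i];
            // Occasional mutation keeps the population exploring.
            if (coin(rng) < mutationRate) child[i] += noise(rng);
        }
        children.push_back(std::move(child));
    }
    return children;
}
```

Fitness-proportional selection is the most direct way to let better performers “produce more”; tournament selection is a common alternative when fitness scores vary widely in scale.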

The trick at this point is that you have to define what constitutes “fittest” in the Darwinian sense, and the fitness function is what accomplishes this. It simply provides a “score” for a specific neural network based on its performance: how close it came to your desired shellcode. That’s pretty much it; you only have to create a scoring system that rewards the behavior you desire. The genetic algorithm, plus a healthy dose of CPU cycles, handles the rest. Once you have your encoded shellcode neural networks, creating a loader that pushes the inputs in and executes the resulting shellcode is a simple task.
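A fitness function in this spirit could reward a network for reproducing the target bytes when given the correct passphrase, and for *not* reproducing them when given wrong guesses. This sketch treats the network as a black-box callable; the input encoding (`toInputs`), the `closeness` metric and the equal weighting of the two terms are assumptions for illustration, not ENNEoS’s actual scoring.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <string>
#include <vector>

// The network is treated as a black box mapping inputs to outputs in [0, 1].
using Network = std::function<std::vector<double>(const std::vector<double>&)>;

// Hypothetical passphrase encoding: one input per character, scaled to [0, 1].
// A real network with a fixed number of inputs would also need padding.
std::vector<double> toInputs(const std::string& s) {
    std::vector<double> v;
    for (unsigned char c : s) v.push_back(c / 255.0);
    return v;
}

// Mean closeness of outputs to the target bytes (1 = exact match).
double closeness(const std::vector<double>& out,
                 const std::vector<std::uint8_t>& target) {
    assert(out.size() == target.size() && !target.empty());
    double total = 0.0;
    for (std::size_t i = 0; i < target.size(); ++i)
        total += 1.0 - std::fabs(out[i] - target[i] / 255.0);
    return total / target.size();
}

// Reward reproducing the target for the correct passphrase, and reward NOT
// reproducing it for wrong guesses. With outputs in [0, 1] the score is in [0, 2].
double fitness(const Network& net, const std::string& passphrase,
               const std::vector<std::string>& wrongGuesses,
               const std::vector<std::uint8_t>& target) {
    double score = closeness(net(toInputs(passphrase)), target);
    if (!wrongGuesses.empty()) {
        double leak = 0.0;
        for (const std::string& guess : wrongGuesses)
            leak += closeness(net(toInputs(guess)), target);
        score += 1.0 - leak / wrongGuesses.size();
    }
    return score;
}
```

Under this scoring, a network that always emits the target scores 1.0 (full marks for the correct passphrase, nothing for the wrong ones), while a network that emits the target only for the correct passphrase scores higher, which is the behavior the genetic algorithm should select for.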

If you’re interested in giving ENNEoS a try in its current rough form, you can find the source code on my GitHub at:

https://github.com/hoodoer/ENNEoS

If you’re interested in a live demo of ENNEoS, I will be presenting the research in May at HackMiami and in early June at Cackalacky Con. We hope to see you there.